About the project

SpeechSTAR is a collaborative project between the University of Strathclyde, University of Glasgow, and Queen Margaret University, funded by the Economic and Social Research Council’s Secondary Data Analysis Initiative. SpeechSTAR aims to help Speech and Language Therapists, children with Speech Sound Disorders and their families by providing visual insight into how speech sounds are produced.

Speech animations

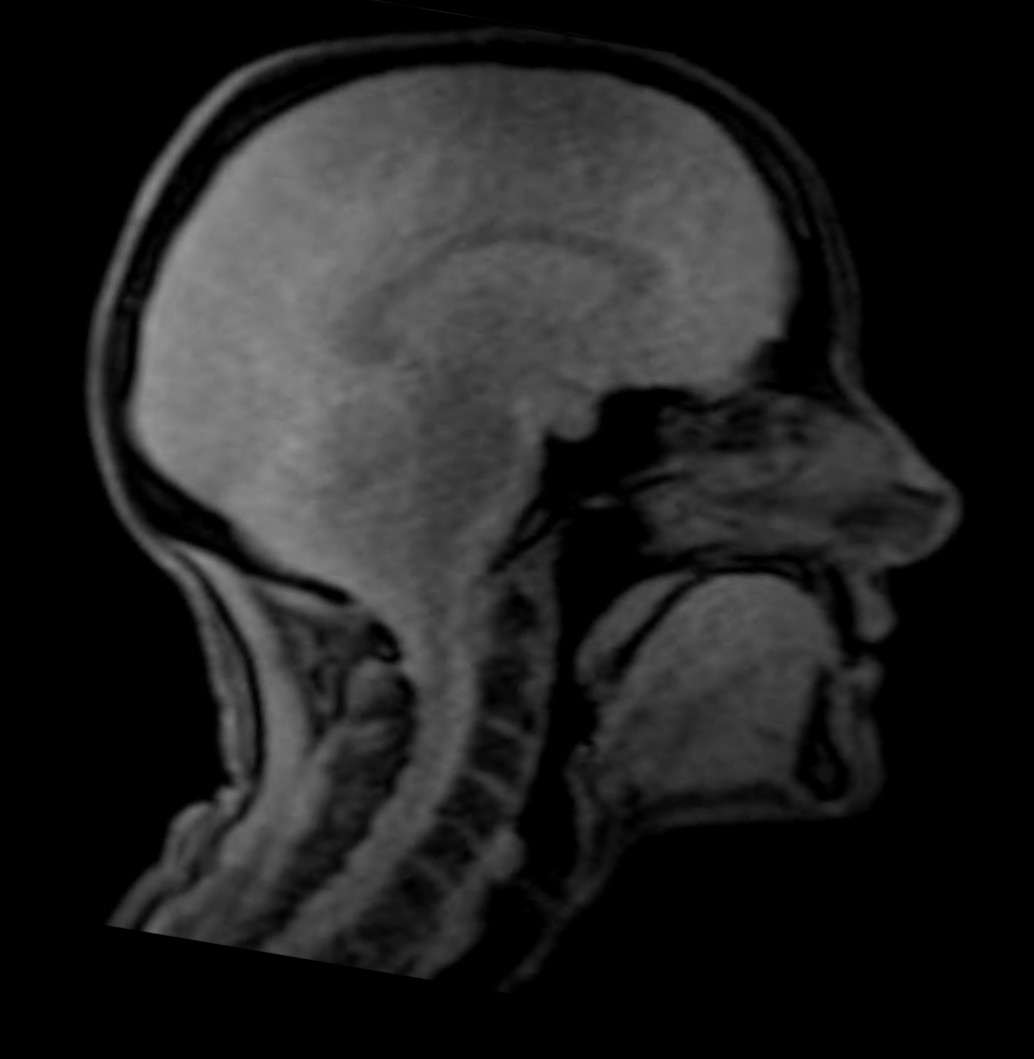

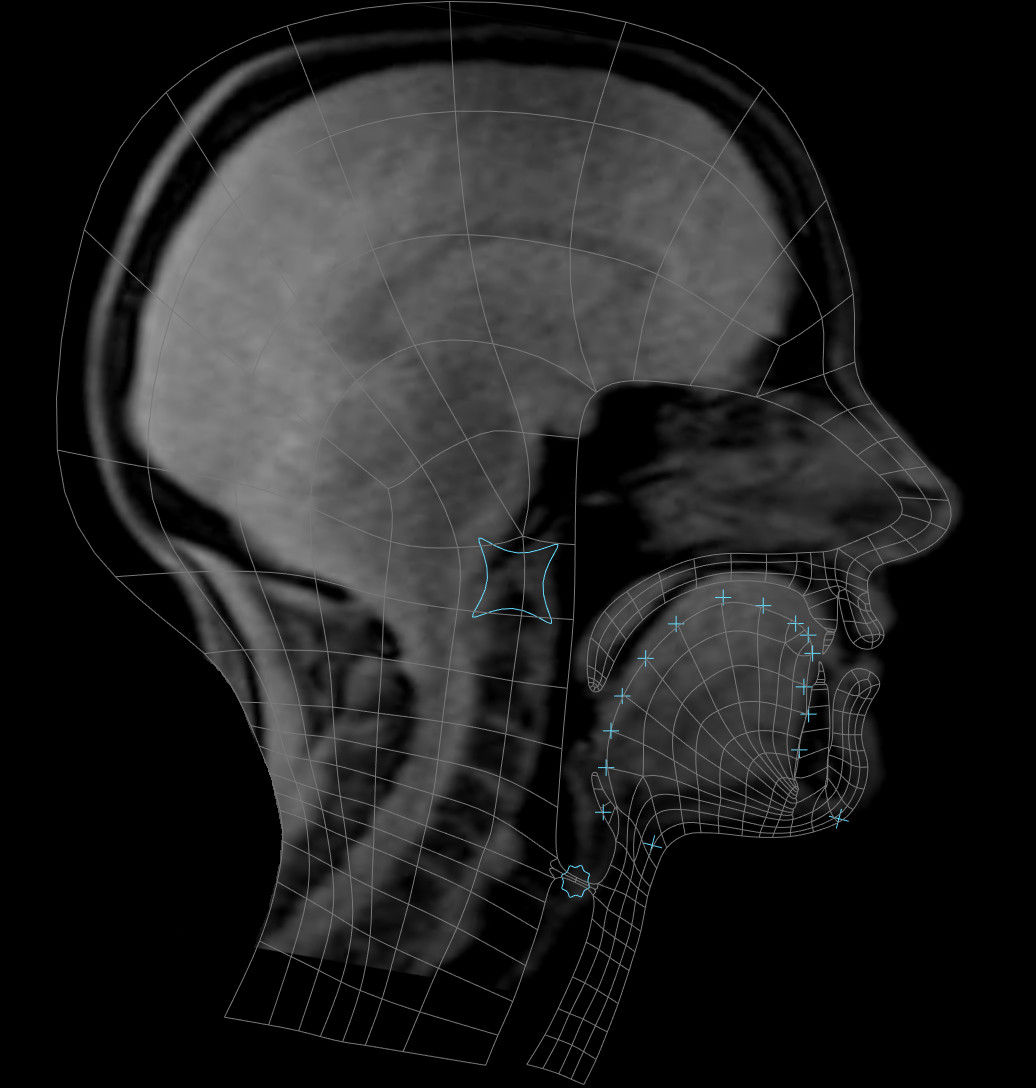

Speech animations on this site are based on real-time magnetic resonance imaging (MRI) recordings of Prof. Janet Beck, a phonetician and speech and language therapist.

Model talker Prof. Janet Beck

MRI still frame of the model talker’s head

Animation rig superimposed on the MRI video frame

Ultrasound Tongue Imaging

Ultrasound is sound at frequencies higher than the human ear can hear. It can be used to image structures inside the body by sending sound waves into soft tissue and detecting the echoes that are reflected back. For tongue imaging, an ultrasound probe is placed under the speaker’s chin, and gel is applied to the probe’s surface to ensure good contact between the probe and the skin. A lightweight headset can be used to keep the probe in position under the chin. The real-time movements of the tongue can then be viewed in profile on a laptop screen.

A child wearing the ultrasound probe-stabilising headset

A profile view of a child’s ultrasound-imaged tongue moving inside the head

Ultrasound Tongue Imaging compared to Magnetic Resonance Imaging

For over two decades, ultrasound has been used to image the moving tongue inside the head, both to study speech and for speech therapy. The benefit of ultrasound tongue imaging in speech therapy is that it allows us to see exactly what the tongue is doing during speech, and to use this information to help change tongue movements when children are having difficulty with specific speech sounds. On the SpeechSTAR website, we show some examples of how ultrasound can be used in speech therapy and how it provides a visual guide for children to follow, in addition to the descriptions and sound examples given by the speech and language therapist.

Project team

- Principal Investigator: Dr Eleanor Lawson (University of Strathclyde)

- Co-Investigator: Prof. Joanne Cleland (University of Strathclyde)

- Co-Investigator: Prof. Jane Stuart-Smith (University of Glasgow)

- Animator: Dr Gregory Leplatre (Napier University)

- Web Developer: Brian Aitken (University of Glasgow)

- Model talker: Prof. Janet Beck (Professor Emerita, Queen Margaret University)

- Clinical Advisor: Miriam Seifert (North Bristol NHS Trust)

Advisory panel

- Dr Sally Bates (Plymouth Marjon University)

- Dr Jonathan Preston (Syracuse University)

- Dr Claire Timmins (University of Strathclyde)

- Dr Zoe Roxburgh (Speech Therapy Clinician, Houston, Texas)

- Lisa Crampin (Yorkhill Hospital for Sick Children, Glasgow)

- Carolyn Hawkes (NHS Lothian)

Contact us

If you would like to leave feedback, or have a question about this resource, please get in touch with:

Dr Eleanor Lawson (PI),

Speech and Language Therapy,

University of Strathclyde,

40 George Street,

Glasgow,

G1 1QE

Scotland, UK

Email: eleanor.lawson@strath.ac.uk

Prof. Joanne Cleland,

Speech and Language Therapy,

University of Strathclyde,

40 George Street,

Glasgow,

G1 1QE

Scotland, UK

Email: joanne.cleland@strath.ac.uk

Prof. Jane Stuart-Smith,

Glasgow University Laboratory of Phonetics (GULP) / English Language & Linguistics,

Glasgow G12 8QQ

Scotland, UK

Email: Jane.Stuart-Smith@glasgow.ac.uk

Tel: +44 (0) 141 330 6852

Licensing information

This work is licensed under CC BY-NC-ND 4.0.

You may copy and distribute materials on this site in any medium or format, in unadapted form and for non-commercial purposes only, provided that you give appropriate credit to the creators.

© Lawson, Cleland and Stuart-Smith